Common test techniques for ESL teachers

What are test techniques?

Quite simply, test techniques are means of eliciting behaviour from candidates that will tell us about their language abilities. What we need are techniques that:

• will elicit behaviour which is a reliable and valid indicator of the ability in which we are interested;

• will elicit behaviour which can be reliably scored;

• are as economical of time and effort as possible;

• will have a beneficial backwash effect, where this is relevant.

This blog shares common techniques that can be used to test a variety of abilities, including reading, listening, grammar and vocabulary. We begin with an examination of the multiple choice technique and then go on to look at techniques that require the test-taker to construct a response (rather than just select one from a number provided by the test-maker).

Multiple choice items

Multiple choice items take many forms, but their basic structure is as follows. There is a stem:

Ashley has been here __________ half an hour.

and a number of options, one of which is correct, the others being distractors:

A. during

B. for

C. while

D. since

It is the candidate’s task to identify the correct or most appropriate option (in this case B). Perhaps the most obvious advantage of multiple choice is that scoring can be perfectly reliable. Scoring should also be rapid and economical. A further considerable advantage is that, because the candidate has only to make a mark on the paper or, on a computer, choose from a drop-down menu, more items can be included in a given period of time than would otherwise be possible. This is likely to make for greater test reliability. Finally, it allows the testing of receptive skills without requiring the test-taker to produce written or spoken language.

The advantages of the multiple choice technique were so highly regarded at one time that it almost seemed to be the only way to test. While many laymen have always been sceptical of what could be achieved through multiple choice testing, it is only fairly recently that the technique’s limitations have been more generally recognised by professional testers. The difficulties with multiple choice are as follows.

The technique tests only recognition knowledge

If there is a lack of fit between at least some candidates’ productive and receptive skills, then performance on a multiple choice test may give a quite inaccurate picture of those candidates’ ability. A multiple choice grammar test score, for example, may be a poor indicator of someone’s ability to use grammatical structures. The person who can identify the correct response in the item above may not be able to produce the correct form when speaking or writing. This is in part a question of construct validity: whether or not grammatical knowledge of the kind that can be demonstrated in a multiple choice test underlies the productive use of grammar. Even if it does, there is still a gap to be bridged between knowledge and use; if use is what we are interested in, that gap will mean that test scores are at best giving incomplete information.

Guessing may have a considerable but unknowable effect on test scores

The chance of guessing the correct answer in a three-option multiple choice item is one in three, or roughly 33 percent. On average we would expect someone to score 33 on a 100-item test purely by guesswork. We would expect some people to score fewer than that by guessing, others to score more. The trouble is that we can never know what part of any particular individual’s score has come about through guessing. Attempts are sometimes made to estimate the contribution of guessing by assuming that all incorrect responses are the result of guessing, and by further assuming that the individual has had average luck in guessing. Scores are then reduced by the number of points the individual is estimated to have obtained by guessing. However, neither assumption is necessarily correct, and we cannot know that the revised score is the same as (or very close to) the one an individual would have obtained without guessing. While other testing methods may also involve guessing, we would normally expect the effect to be much less, since candidates will usually not have a restricted number of responses presented to them (with the information that one of them is correct).

If multiple choice is to be used, every effort should be made to have at least four options (in order to reduce the effect of guessing). It is important that all of the distractors should be chosen by a significant number of test-takers who do not have the knowledge or ability being tested. If there are four options but only a very small proportion of candidates choose one of the distractors, the item is effectively only a three-option item. Successful guessing can also be reduced by using items with five options, two of which are correct and must both be chosen by the test-taker. For example:

If I had chosen a different career, __________ more money.

a. I’ve made

b. I’d have made

c. I’ll be making

d. I’d be making

e. I’m making

The item above is only marked as correct if the test-taker chooses both correct options (in this example, options b and d). Since, logically, guessing will be less effective than if only one correct option needs to be identified, this type of item would appear to have more validity than a traditional item with only one correct option. A drawback to this technique, though, is that items with two correct options can be more difficult to write and indeed, depending on the language point being tested, will sometimes be impossible to create.
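The guessing arithmetic in this section can be made concrete with a short Python sketch. It computes the expected score from blind guessing, applies the classic correction for guessing implied by the two assumptions described above (every wrong answer was a guess; the candidate had average luck), and compares the odds of a lucky guess on a single-answer four-option item with those on a five-option, two-answer item. The correction formula, right minus wrong ÷ (k − 1) for k-option items, is the standard textbook version rather than one given in this post, and the function names are our own.

```python
from fractions import Fraction
from math import comb


def expected_chance_score(n_items: int, n_options: int) -> Fraction:
    """Average number of items answered correctly by blind guessing alone."""
    return Fraction(n_items, n_options)


def corrected_score(right: int, wrong: int, n_options: int) -> Fraction:
    """Classic correction for guessing (items left blank are ignored).

    Assumes every wrong answer was a guess and that the candidate had
    average luck. Guessing on G items of k options yields, on average,
    G/k right and G(k-1)/k wrong, so each wrong answer 'implies'
    1/(k-1) lucky right answers, which are subtracted.
    """
    return right - Fraction(wrong, n_options - 1)


def blind_guess_chance(n_options: int, n_correct: int = 1) -> Fraction:
    """Chance of choosing exactly the right set of options at random,
    when the item is marked correct only if all n_correct are chosen."""
    return Fraction(1, comb(n_options, n_correct))


# 100 three-option items answered entirely by guessing:
print(round(float(expected_chance_score(100, 3)), 1))  # 33.3
# 70 right and 30 wrong on three-option items:
print(corrected_score(70, 30, 3))  # 55
# Single-answer four-option item vs five options with two correct:
print(blind_guess_chance(4), blind_guess_chance(5, 2))  # 1/4 1/10
```

Assuming the candidate knows that two options must be chosen and picks a pair at random, there are C(5, 2) = 10 possible pairs, so the chance of a lucky hit falls from 1 in 4 to 1 in 10. The correction, by contrast, only works on average: as the text stresses, it cannot tell us how lucky any individual candidate actually was.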

The technique severely restricts what can be tested

The basic problem here is that multiple choice items require distractors, and distractors are not always available. In a grammar test, it may not be possible to find three or four plausible alternatives to the correct structure. The result is often that the command of what may be an important structure is simply not tested. An example would be the distinction in English between the past simple and the present perfect. For learners at a certain level of ability, in a given linguistic context, there are no other alternatives that are likely to distract. The argument that this must be a difficulty for any item that attempts to test for this distinction is hard to sustain, since other items that do not overtly present a choice may elicit the candidate’s usual behaviour, without the candidate resorting to guessing. In other words, ‘constructed response items’, where students are required to supply their own answer, allow for a greater range of structures to be tested.

It is very difficult to write successful items

A further problem with multiple choice is that, even where items are possible, good ones are extremely difficult to write. Professional test writers expect to write many more multiple choice items than they actually need for a test, and it is only after trialling and statistical analysis of performance on the items that they can recognise the ones that are usable. It is our experience that multiple choice tests that are produced for use within institutions are often shot through with faults. Common amongst these are: more than one correct answer; no correct answer; clues in the options as to which is correct (for example, the correct option may be different in length from the others); and ineffective distractors. The amount of work and expertise needed to prepare good multiple choice tests is so great that, even if one ignored other problems associated with the technique, one would not wish to recommend it for regular achievement testing (where the same test is not used repeatedly) within institutions. Savings in time for administration and scoring will be outweighed by the time spent on successful test preparation. It is true that the development and use of item banks, from which a selection can be made for particular versions of a test, makes the effort more worthwhile, but great demands are still made on time and expertise.
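The statistical analysis of trial results mentioned above can start very simply. The Python sketch below, run on invented trial data, computes the facility value (the proportion of candidates answering correctly) and flags distractors that hardly anyone chose. The 5 percent cut-off and the function name are assumptions for illustration, not an established standard; a fuller analysis would also look at how well each item discriminates between stronger and weaker candidates.

```python
from collections import Counter


def analyse_item(responses, key, options="ABCD", min_share=0.05):
    """Facility value and weak distractors for one trialled item.

    responses: the option letter each candidate chose.
    min_share: hypothetical cut-off; a distractor chosen by a smaller
    proportion of candidates than this is flagged as ineffective.
    """
    counts = Counter(responses)
    n = len(responses)
    facility = counts[key] / n  # proportion answering correctly
    weak = [opt for opt in options
            if opt != key and counts[opt] / n < min_share]
    return {"facility": facility, "weak_distractors": weak}


# Invented trial data for the during/for/while/since item (key B):
# 13 chose B, 4 chose D, 3 chose C and nobody chose A.
trial = list("B" * 13 + "D" * 4 + "C" * 3)
print(analyse_item(trial, key="B"))
# {'facility': 0.65, 'weak_distractors': ['A']}
```

Here option A would be a candidate for rewriting: with nobody choosing it, the item behaves like a three-option item, exactly the situation described under guessing.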

Backwash may be harmful

It should hardly be necessary to point out that where a test that is important to students is multiple choice in nature, there is a danger that practice for the test will have a harmful effect on learning and teaching. Practice at multiple choice items (especially when – as can happen – as much attention is paid to improving one’s educated guessing as to the content of the items) will not usually be the best way for students to improve their command of a language.

Cheating may be facilitated

The fact that the responses on a multiple choice test (a, b, c, d) are so simple makes them easy to communicate to other candidates non-verbally. Some defence against this is to have at least two versions of the test, the only difference between them being the order in which the options are presented.

All in all, the multiple choice technique is best suited to relatively infrequent testing of large numbers of candidates. This is not to say that there should be no multiple choice items in tests produced regularly within institutions. In setting a reading comprehension test, for example, there may be certain tasks that lend themselves very readily to the multiple choice format, with obvious distractors presenting themselves in the text. There are real-life tasks (say, a shop assistant identifying which one of four dresses a customer is describing) which are essentially multiple choice. The simulation in a test of such a situation would seem to be perfectly appropriate. What the reader is being urged to avoid is the excessive, indiscriminate and potentially harmful use of the technique.
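Producing a second version of a test that differs only in the order of the options, as suggested under cheating, is easy to automate. One simple approach, assumed here rather than taken from this post, is to rotate every item's options by one place: each option then moves to a different letter, so no correct-answer letter is shared between the two versions, and the answer key for the new version is recalculated automatically. The function names are hypothetical.

```python
LETTERS = "ABCDEFGH"


def rotate_options(options, shift):
    """Move every option `shift` places towards the front, wrapping round."""
    shift %= len(options)
    return options[shift:] + options[:shift]


def make_version(items, shift):
    """items: list of (stem, options, correct_option) tuples.

    Returns the items with reordered options and the answer key for
    this version. Any shift that is not a multiple of the number of
    options moves every option, and so every correct answer, to a new letter.
    """
    version, key = [], []
    for stem, options, correct in items:
        reordered = rotate_options(options, shift)
        version.append((stem, reordered))
        key.append(LETTERS[reordered.index(correct)])
    return version, key


items = [("Ashley has been here __________ half an hour.",
          ["during", "for", "while", "since"], "for")]
_, key_a = make_version(items, 0)  # original order
_, key_b = make_version(items, 1)  # rotated by one place
print(key_a, key_b)  # ['B'] ['A']
```

Rotation is used here instead of a random shuffle because a shuffle can leave the correct option in the same position, which would defeat the purpose for that item. Options with a natural order (numbers, dates) may be better handled separately.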

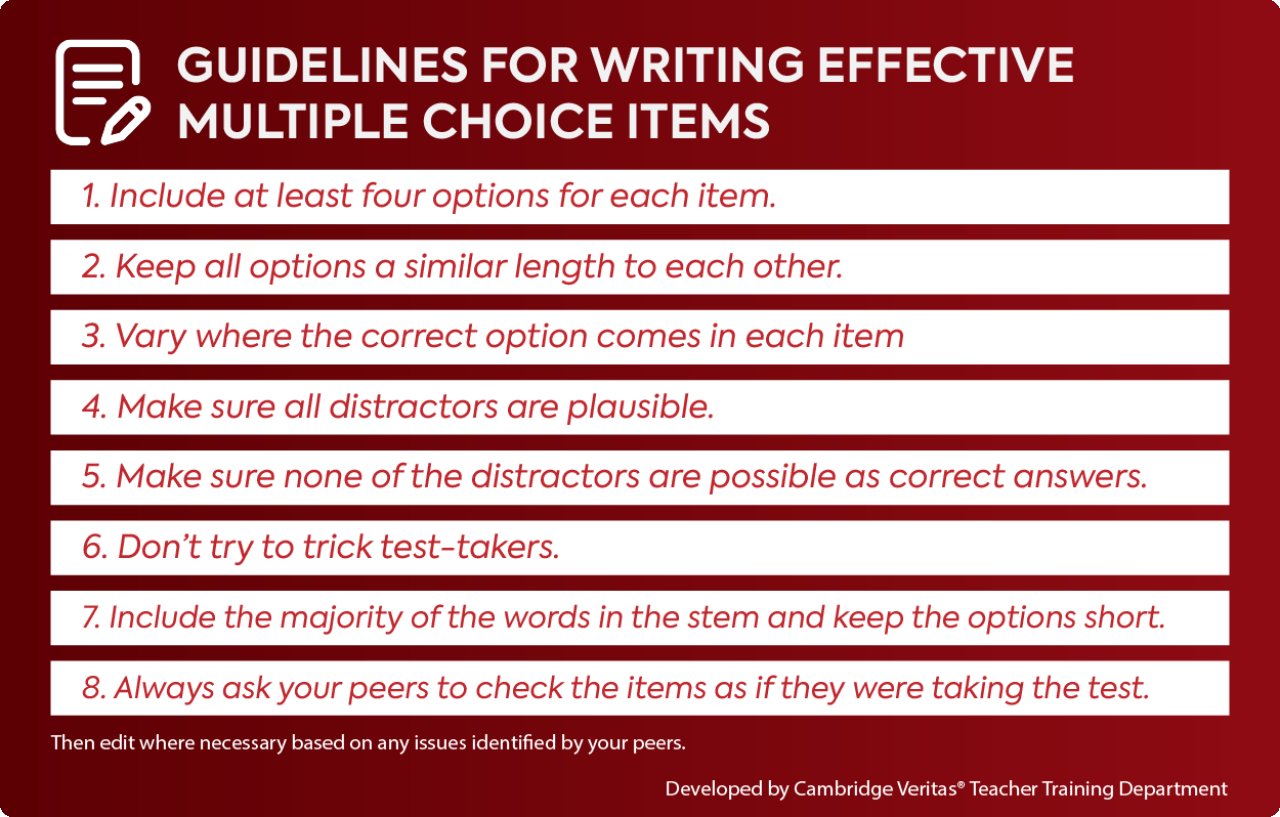

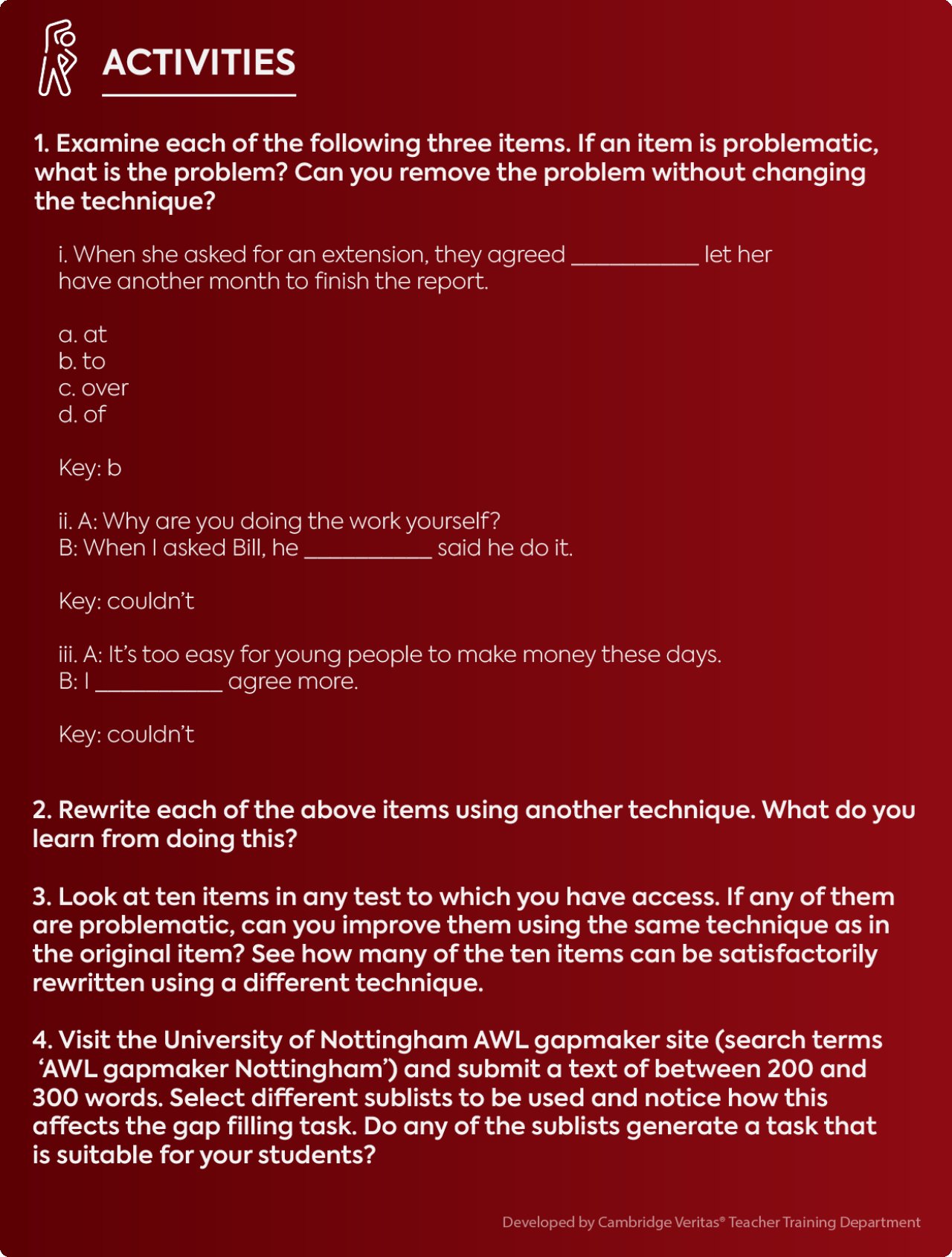

Having identified problems with multiple choice items, we have to recognise that teachers are often required to write them. With this in mind, we include here a set of guidelines to help avoid the most common pitfalls. Teachers can use this as a checklist, while always bearing in mind the various issues with this technique as described in this blog.

Yes/No and True/False items

Items in which the test-taker has merely to choose between Yes and No, or between True and False, are effectively multiple choice items with only two options. The attraction of such items is the speed with which they can be written and answered. However, their obvious weakness is that the test-taker has a 50 percent chance of choosing the correct response by guessing alone. In our view, there is no place for items of this kind in a formal test, although they may well have a use as part of informal, formative assessment where the accuracy of the results is not critical. True/False items are sometimes modified by requiring test-takers to give a reason for their choice. However, this extra requirement is problematic, first because it adds a potentially difficult writing task when writing is not meant to be tested (a validity problem), and secondly because the responses are often difficult to score (a reliability and validity problem). Items of this kind may be improved slightly by requiring candidates to justify their choice of Yes or No by identifying a phrase or sentence in the text which supports their choice (by underlining or copying). This removes the potentially difficult writing task, but in practice it is often difficult to specify all acceptable responses. For example, there may be more than one sentence offering support.
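The 50 percent figure matters more than it may first appear, because over a whole test the chances add up. If each blind guess is treated as independent, the number of correct guesses follows a binomial distribution. The Python sketch below compares the chance of reaching a pass mark of 12 out of 20 purely by guessing on True/False items and on four-option multiple choice items; the test length and pass mark are invented for illustration.

```python
from math import comb


def p_at_least(k, n, p):
    """Probability of getting at least k of n items right by blind guessing,
    each guess succeeding independently with probability p (binomial tail)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


# Hypothetical 20-item test with a pass mark of 12 (60 percent):
print(round(p_at_least(12, 20, 1 / 2), 3))  # 0.252 (True/False)
print(round(p_at_least(12, 20, 1 / 4), 3))  # 0.001 (four options)
```

On these assumptions roughly one blind guesser in four would pass the True/False version, against about one in a thousand for the four-option version.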

Short-answer items

Items in which the test-taker has to provide a short answer are common, particularly in listening and reading tests. Examples:

i. What does ‘it’ in the last sentence refer to?

ii. How old was Harry Potter when he started doing magic?

iii. Why was Harry unhappy?

Advantages of short-answer items over multiple choice are that:

• guessing will (or should) contribute less to test scores;

• the technique is not restricted by the need for distractors (though there have to be potential alternative responses);

• cheating is likely to be more difficult;

• though great care must still be taken, items should be easier to write.

Disadvantages are:

• responses may take longer and so reduce the possible number of items, which in turn has the potential to reduce the test’s reliability;

• the test-taker has to produce language in order to respond;

• scoring may be invalid or unreliable, if judgement is required;

• scoring may take longer.
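The link between the number of items and reliability, raised in the first disadvantage above, is often estimated with the Spearman-Brown prophecy formula, which predicts the reliability of a lengthened or shortened test made up of items of similar quality. The formula is not mentioned in this post, so treat the Python sketch below as background; the starting reliability of 0.70 is invented.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability after changing test length by length_factor
    (2 = twice as many items, 0.5 = half as many), assuming the added or
    removed items are of similar quality to the rest."""
    k, r = length_factor, reliability
    return k * r / (1 + (k - 1) * r)


# Invented starting reliability of 0.70:
print(round(spearman_brown(0.70, 2), 2))    # 0.82 (twice as many items)
print(round(spearman_brown(0.70, 0.5), 2))  # 0.54 (half as many items)
```

If short answers took twice as long as multiple choice responses, so that only half as many items fitted into the time, reliability would on these assumptions fall from 0.70 to about 0.54. This is why keeping the required response really short matters.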

The first two of these disadvantages may not be significant if the required response is really short (and at least the test-takers do not have to ponder four options, three of which have been designed to distract them). The next two can be overcome by making the required response unique (i.e. there is only one possible answer) and ensuring that it can be found in the text (or requires only very simple language). Looking at the examples above, without needing to see the text, we can see that the correct response to Item i. should be unique and found in the text. The same could be true of Item ii. Item iii., however, may cause problems, since there may be several acceptable ways of expressing the reason (a problem which gap filling, discussed below, can solve). We believe that short-answer questions have a role to play in serious language testing. Only when testing has to be carried out on a very large scale would we think of dismissing short-answer questions as a possible technique because of the time taken to score. With the increased use of computers in testing (in TOEFL®, for example), where written responses can be scored reliably and quickly, there is no reason for short-answer items not to have a place in the very largest testing programmes.

Gap filling items

Items in which test-takers have to fill a gap with a word are also common. An example for a reading test might be:

Harry was unhappy because his parents __________ when he was young and he was __________ at school.

From this example, assuming that the missing words (let us say they are ‘died’ and ‘bullied’) can be found in the text, it can be seen that the problem of the third short-answer item has been overcome. Gap filling items for reading or listening work best if the missing words are to be found in the text or are straightforward, high frequency words which should not present spelling problems.

Gap filling items can also work well in tests of grammar and vocabulary. Examples:

He asked me for money, __________ though he knows I earn a lot less than him.

Our son just failed another exam. He really needs to pull his __________ up.

But it does not work well where the grammatical element to be tested is discontinuous, and so needs more than one gap. An example would be where one wants to see if the test-taker can provide the past continuous appropriately. None of the following is satisfactory:

i. While they __________ watching television, there was a sudden bang outside.

ii. While they were __________ television, there was a sudden bang outside.

iii. While they __________ __________ television, there was a sudden bang outside.

In the first two cases, alternative structures which the test-taker might naturally have used (such as the simple past) are excluded. The same is true in the third case, unless the test-taker inserted an adverb and wrote, for example, ‘quietly watched’, which is an unlikely response. In all three cases, there is too strong a clue as to the structure which is needed.

Gap filling does not always work well for grammar or vocabulary items where minor or subtle differences of meaning are concerned, as the following items demonstrate.

i. A: What will he do?

B: I think he __________ resign.

A variety of modal verbs (will, may, might, could, etc.) can fill the gap satisfactorily.

Providing context can help:

ii. A: I wonder who that is.

B: It __________ be the doctor.

This item has the same problem as the previous one. But adding:

A: How can you be so certain?

means that the gap must be filled with a modal expressing certainty (must). But even with the added context, will may be another possibility.

When the gap filling technique is used, it is essential that test-takers are told very clearly and firmly that only one word can be put in each gap. They should also be told whether contractions (I’m, isn’t, it’s, etc.) count as one word. This is particularly important if the test is to be computer marked, as there will be no possibility of marker discretion. (In our experience, counting contractions as one word is advisable, as it allows greater flexibility in item construction.)

Gap filling is a valuable technique. It has the advantages of the short-answer technique, but the greater control it exercises over the test-takers means that it does not call for significant productive skills. There is no reason why the scoring of gap filling should not be highly reliable, provided that it is carried out with a carefully constructed key on which the scorers can rely completely (and not have to use their individual judgement).
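A carefully constructed key maps naturally onto computer marking. The Python sketch below, with hypothetical function names, scores each gap against a set of acceptable answers, enforces the one-word-per-gap rule, and treats a contraction as a single word (including curly apostrophes, which word processors often insert). Whether ‘will’ should be accepted alongside ‘must’ in the doctor item is a decision for the test writer; it appears here only as an example of listing alternatives in the key.

```python
def normalise(answer):
    """Trim, lower-case and treat curly and straight apostrophes alike."""
    return answer.strip().lower().replace("\u2019", "'")


def score_gap(answer, acceptable):
    """1 if the response is a single word listed in the key, else 0.

    Splitting on whitespace means a contraction such as isn't counts
    as one word, in line with the advice on contractions.
    """
    word = normalise(answer)
    if len(word.split()) != 1:  # enforce 'one word per gap'
        return 0
    return int(word in {normalise(a) for a in acceptable})


# Key: every acceptable answer for each gap (decided by the test writer).
key = [{"died"}, {"bullied"}, {"must", "will"}]
responses = ["Died", "was bullied", "will"]
print(sum(score_gap(r, k) for r, k in zip(responses, key)))  # 2
```

Because every decision is made in advance in the key, the computer (or a human scorer following the same key) never has to exercise judgement.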

One recent development is the use of corpora and computer algorithms to assist with gap filling item writing. Some programs create items based on a keyword which a user submits, while others will take a text and automatically replace certain words with gaps. The choice of which words are to be gapped is of course crucial. One program run by the University of Nottingham chooses words based on different levels of the Academic Word List, thereby allowing users to vary the difficulty of the task. While these programs undoubtedly have the potential to be useful tools, their output needs to be scrutinised and modified where necessary before use. However, as algorithms continue to be developed and finely tuned, it will be interesting to see to what extent they can replace human item writers.
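To make the idea concrete, here is a deliberately crude Python sketch of the second kind of program: it gaps every word that appears on a target word list and records the answer key. The short word list is hypothetical and merely stands in for a list such as one level of the Academic Word List; this is not how the Nottingham program works (its method is not described here), and, as noted above, output like this would still need human checking.

```python
import re

GAP = "__________"


def make_gapped_text(text, target_words):
    """Replace every word on the target list with a gap.

    Returns the gapped text and the answer key, in order of appearance.
    Matching ignores case; the key keeps the word as it appeared.
    """
    key = []

    def replace(match):
        word = match.group(0)
        if word.lower() in target_words:
            key.append(word)
            return GAP
        return word

    # A 'word' here is a run of letters, optionally with one apostrophe.
    gapped = re.sub(r"[A-Za-z]+(?:'[A-Za-z]+)?", replace, text)
    return gapped, key


# Hypothetical target list standing in for one level of a word list.
targets = {"analyse", "data", "significant"}
text = "Researchers analyse the data to identify significant trends."
gapped, key = make_gapped_text(text, targets)
print(gapped)  # Researchers __________ the __________ to identify __________ trends.
print(key)     # ['analyse', 'data', 'significant']
```

Swapping in a longer or more advanced target list changes which words are gapped, which is the lever such programs use to vary the difficulty of the task.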

FURTHER READING

Heaton (1975) discusses various types of item and gives many examples for analysis by the reader. Amini and Ibrahim-González (2012) suggest that the backwash effects of the multiple choice technique are not as beneficial as those of the cloze technique. Their study focuses specifically on vocabulary acquisition. Currie and Chiraramanee’s research (2010) casts further doubt on the validity of the multiple choice technique, particularly in comparison to constructed response items. Smith et al. (2010) give a detailed description and evaluation of a corpus-driven gap filling system, TEDDCLOG.
